Converting to Delta Lake

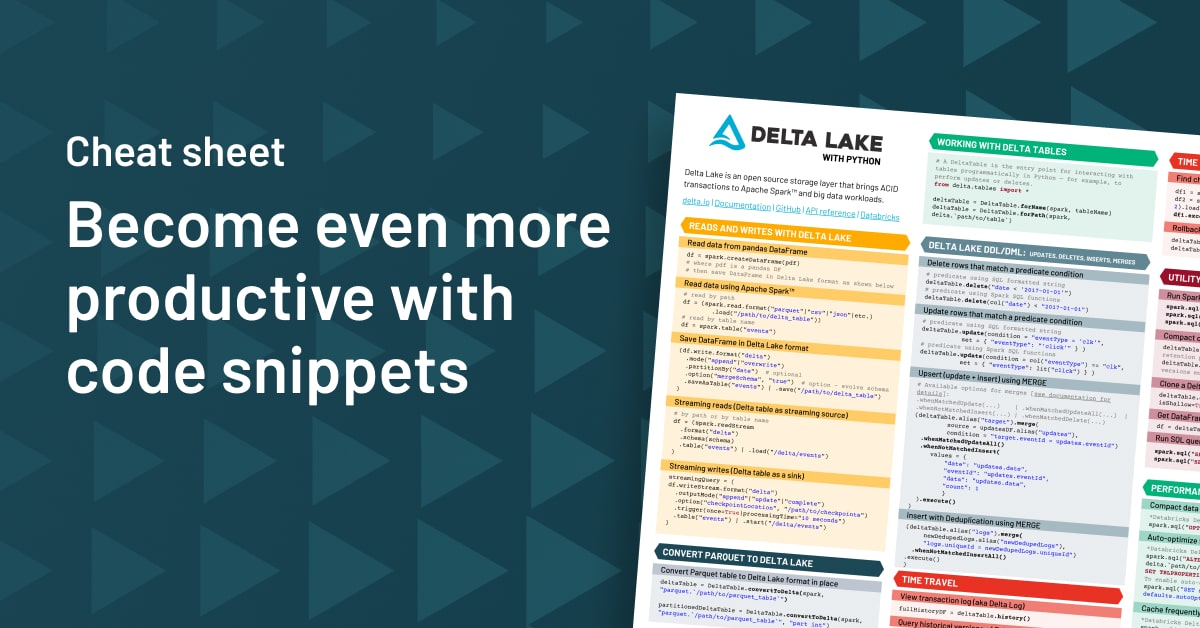

To get started with Delta Lake, simply say “Delta” instead of “Parquet”, when writing out your tables with Spark. You can also use Spark SQL to create a Delta Lake table from scratch with the CREATE TABLE USING DELTA command. Or you can use the CONVERT TO DELTA command to convert your existing Parquet files to Delta Lake format in place.

Now that we’ve converted our data to Delta Lake, let’s look at the data in our table. We have 14,705 batch records in this table. And you can see here what the data actually looks like. Next, I’ll show you how Delta Lake is able to handle batch and streaming data with ease, by setting up two streaming writes to our Delta Lake table alongside two streaming reads that will happen simultaneously.

Multiple concurrent streaming reads and writes with Delta Lake

Here, we’ve gotten those reads and writes started. So as you can see in this chart, the initial 14,705 batch records in our table are still present. But new data is now streaming in from Stream A and Stream B at around 500 Records per second each. Meanwhile, Delta Lake can also serve consistent views of this table to our streaming read queries, which are powering the visualizations below.

In this visualization, you can see the new data streaming into our table over time. Each new bar represents a 10 second window, during which both stream A and stream B are writing to our table concurrently. And again, this visualization is powered by a streaming read from our table as well. Finally, just for good measure, we can throw in a batch query.

So what this shows is that Delta Lake tables can easily handle multiple readers and writers of both batch and streaming data all at once.

ACID Transactions

So how is all of this possible? It’s possible because of ACID transactions. Delta Lake uses a transaction log that serves as a master record of all changes made to each table. That also determines which files are part of our table at all times. You can view the transaction log at any time by running the DESCRIBE HISTORY command as seen here.

When we run this command, you can see that each write to our table has been recorded atomically as a streaming update. Once a new write is recorded in the transaction log, all of our downstream readers will now include that write transaction in their view of the table immediately. So everyone always agrees on what constitutes a Delta Lake table at all times. They simply refer to the transaction log.

Simplifying your data architecture with Delta Lake

This all makes Delta Lake tables really powerful. And at Databricks, we’ve found that many of our customers are able to simplify and streamline their overall data architectures using Delta Lake. By building a simple multi-hop data pipeline with Delta Lake tables, you can reliably transform raw batch and streaming data into high quality, structured data that multiple downstream apps and users can query at once. And what this means from a business ROI perspective is lower cloud computing costs, less complexity to manage, and less time wasted on fixing systems issues like corrupted data.

Schema enforcement and evolution

But Delta Lake does a lot more than just use ACID transactions to combine batch and streaming data. It also offers tools like schema enforcement to protect the quality of the data in your data tables. Without schema enforcement, data with mismatching schemas can change your table schema and break your entire data pipeline, causing cascading failures downstream. So we use schema enforcement to ensure that that doesn’t happen.

But schema enforcement alone is not enough. In the event that we do want to change our table schema, we also need schema evolution. With Delta Lake, we evolve our schema quickly and easily by simply adding the mergeSchema option to our Spark write command. And when we run this command, we can see that the write command that previously failed has now occurred successfully. And the new credit score column is now present in our new table.

Time travel

Another major feature of Delta Lake is the ability to travel back in time using time travel, also known as data versioning. Because every change to our table is recorded as an atomic transaction in the transaction log, we can use this information to recreate the exact state of our table at any point in time.

Time Travel helps you avoid making irreversible changes to your tables. It makes your data sets and experiments reproducible, and offers a verifiable data lineage for audit and governance purposes.

So let’s first review the transaction log using the DESCRIBE HISTORY command that we saw earlier. And as you can see, each version of our table is saved by version number and by timestamp. So we can use this information to do things like query historical versions of our table.

So let’s go ahead and do that. By adding the VERSION AS OF command to our SQL query, our query runs on the very first version of our data set — version 0 — and returns a count of 14,705, the same number of records that were originally present in our table.

Taking time travel one step further, we can roll back our table at any time using the RESTORE command shown here.This is really useful if we decide that we’ve made a change to our table that we want to completely undo, and simply roll back our data to an earlier version.

So as you can see, now when we query our table, those original 14,705 batch records are the only records present in our table. Version 0 has become the current version.

Delta Lake DML: UPDATE, MERGE, DELETE

The next Delta Lake feature I want to demo for you is full support for transactional DML commands like UPDATE, MERGE, and DELETE. These are the SQL commands that make manipulating big data tables quick and easy. Before Delta Lake, deleting a user’s data from a data lake to comply with a GDPR request was difficult to perform without running the risk of data loss or corruption. But with Delta Lake, we can delete a user’s data transactionally, in just one line of code.

First, let’s view the user’s data. We’ll choose the user with loan ID 4420. And now when we run this delete command, you can see that our users data is successfully deleted transactionally.

The same idea applies with other DML commands like INSERT, UPDATE, and MERGE. Delta Lake makes these operations really simple to perform with minimal code, all backed by the reliability of ACID transactions.

So let’s go ahead and use time travel to insert that user’s data back into our table. And when we run the command, we can see that now In fact, there is a record in our table with loan ID 4420.

Next, let’s go ahead and update our user’s data with a single command as well. Here, we’re going to set the funded amount equal to 22,000, for the same user as before, number 4420. After running the command, we see that the user’s funded amount has been updated successfully, since it now equals 22,000.

Finally, Delta Lake also supports UPSERTs, which are a mix of inserts and updates, using the MERGE command. Normally, merges are a difficult, expensive operation that involves several intermediate steps. With Delta Lake, we can skip all of that complexity, and simply use the MERGE command.

So first, we’ll create some dummy data to merge — one row with an update of user 4420’s data, and one row of new data to insert in our table. So when we put together our MERGE command, we can specify that we want to UPDATE the table when the record already exists, and insert it when it doesn’t. So when we run the merge command, as you can see, we successfully updated user 4420’s data, and inserted our new user’s data.

Databricks performance optimizations

Finally, before wrapping up, I want to show you just a couple of additional commands that improve performance when working with big Delta Lake tables. First, there’s the VACUUM command. The VACUUM command allows us to mark any data files that are no longer being used by the current version of our table for deletion.

The next couple of commands are only available with Delta Lake on Databricks. First, there’s the CACHE command, which allows you to cache the results of any frequently used query in memory to speed up that query in the future.

And finally, there’s the Z ORDER OPTIMIZE command shown here, which uses advanced multidimensional data clustering techniques to co-locate related data for the fastest queries possible. Z-Order significantly speeds up many queries and reduces cloud costs by enabling more efficient reads of our data.

So that concludes our hands-on demo of Delta Lake. Try Delta Lake on Databricks today, and join the community online to learn more about how Delta Lake provides the perfect foundation for your lakehouse architecture.