Announcing CARTO’s Spatial Extension for Databricks — Powering Geospatial Analysis for JLL

This is a collaborative post by Databricks and CARTO. We thank Javier de la Torre, Founder and Chief Strategy Officer at CARTO, for his contributions. Today, CARTO is announcing the beta launch of its new product, the Spatial Extension for Databricks, which provides a simple installation and seamless integration with the Databricks Lakehouse...

Introduction to Databricks and PySpark for SAS Developers

This is a collaborative post between Databricks and WiseWithData. We thank Founder and President Ian J. Ghent, Head of Pre-Sales Solutions R&D Bryan Chuinkam, and Head of Migration Solutions R&D Ban (Mike) Sun of WiseWithData for their contributions. Technology has come a long way since the days of SAS®-driven data and analytics workloads....

Introducing Data Profiles in the Databricks Notebook

Before a data scientist can write a report on analytics or train a machine learning (ML) model, they need to understand the shape and content of their data. This exploratory data analysis is iterative, with each stage of the cycle often involving the same basic techniques: visualizing data distributions and computing summary statistics like row...

Deploying dbt on Databricks Just Got Even Simpler

At Databricks, nothing makes us happier than making our users more productive, which is why we are delighted to announce a native adapter for dbt. It’s now easier than ever to develop robust data pipelines on Databricks using SQL. dbt is a popular open source tool that lets a new breed of ‘analytics engineer’ build...

Scala at Scale at Databricks

With hundreds of developers and millions of lines of code, Databricks is one of the largest Scala shops around. This post will be a broad tour of Scala at Databricks, from its inception to usage, style, tooling and challenges. We will cover topics ranging from cloud infrastructure and bespoke language tooling to the human processes...

The Foundation of Your Lakehouse Starts With Delta Lake

It’s been an exciting last few years with the Delta Lake project. The release of Delta Lake 1.0, announced by Michael Armbrust at the Data+AI Summit in May 2021, represents a great milestone for the open source community and we’re just getting started! To better streamline community involvement and ask, we recently published...

Tackle Unseen Quality, Operations and Safety Challenges With Lakehouse Enabled Computer Vision

Globally, out-of-stocks cost retailers an estimated $1T in lost sales. An estimated 20% of these losses are due to phantom inventory, the misreporting of product units actually on-hand. Despite technical advances in inventory management software and processes, the truth is that most retailers still struggle to report accurate unit counts without employees manually performing a...

How DPG Delivers High-quality and Marketable Segments to Its Advertisers

This is a guest authored post by Bart Del Piero, Data Scientist, DPG Media. At the start of a campaign, marketers and publishers will often have a hypothesis of who the target segment will be, but once a campaign starts, it can be very difficult to see who actually responds, abstract a segment based on...

Announcing Databricks Seattle R&D Site

Today, we are excited to announce the opening of our Seattle R&D site and our plan to hire hundreds of engineers in Seattle in the next several years. Our office location is in downtown Bellevue. At Databricks, we are passionate about enabling data teams to solve the world's toughest problems — from making the next...

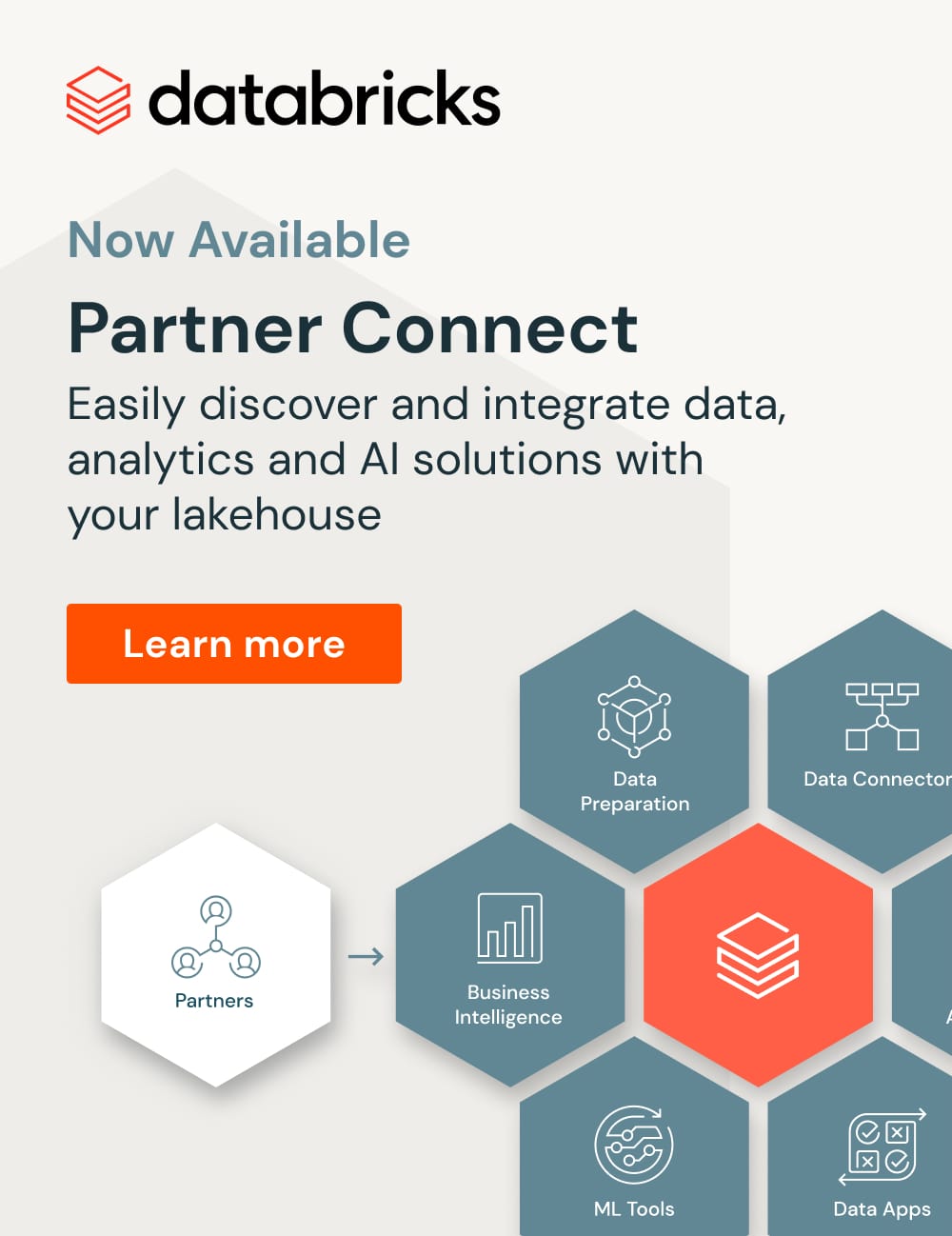

Building Analytics on the Lakehouse Using Tableau With Databricks Partner Connect

This is a guest authored post by Madeleine Corneli, Sr. Product Manager, Tableau. On November 18, Databricks announced Partner Connect, an ecosystem of pre-integrated partners that allows customers to discover and connect data, analytics and AI tools to their lakehouse. Tableau is excited to be among a set of launch partners to be featured...