Migrating to Databricks helps you accelerate innovation, enhance productivity and manage costs with faster, more efficient infrastructure and DevOps.

Discover the benefits of migrating from Hadoop to the Databricks Lakehouse Platform — one open, simple platform to store and manage all your data for all your analytics workloads.

Reducing infrastructure and licensing costs is one major advantage. Beyond that, the Databricks Lakehouse Platform takes a lake-first approach that provides the speed and scale to handle all your production analytics and AI use cases, helping you meet your SLAs, streamline operations and improve productivity.

Why Migrate with Databricks?

A Forrester Total Economic Impact (TEI) study found a 417% ROI for companies that switched to Databricks.

47%

Cost savings from retiring legacy infrastructure

Retire legacy infrastructure and adopt an open and elastic cloud-native service that doesn’t require excess capacity or hardware upgrades.

5%

Increase in revenue with data-driven innovation

Use all enterprise data to build new data products and increase operational efficiencies with powerful artificial intelligence and machine learning capabilities.

25%

Increase in data team productivity

Minimize the DevOps burden with a fully managed, performant and reliable data and analytics platform.

Cloud-based

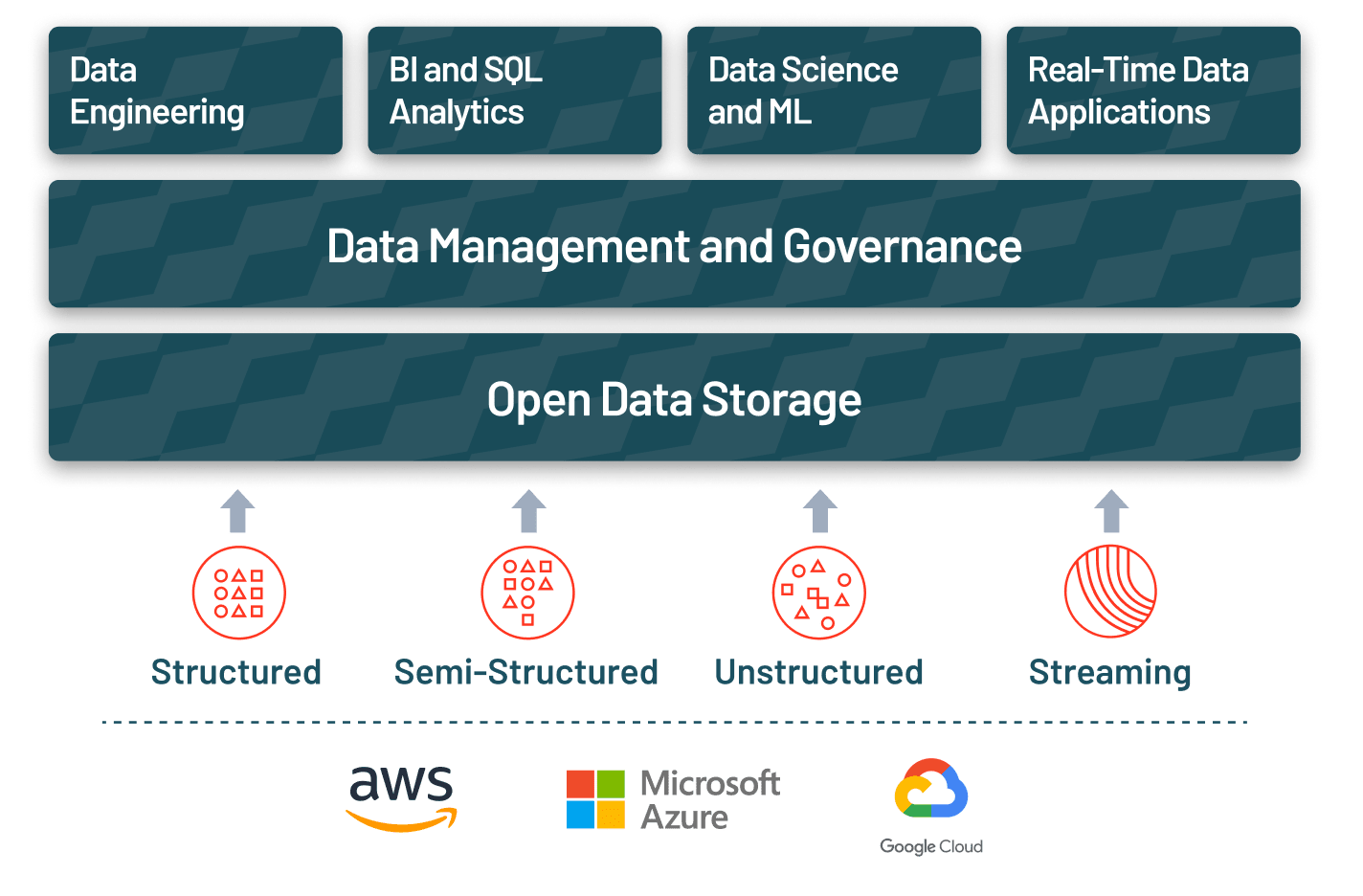

Build an open, simple and collaborative lakehouse architecture with Databricks

Cost-effective scale and performance in the cloud

Lakehouse

Build an open, simple and collaborative lakehouse architecture with Databricks

Databricks Lakehouse Platform

Simple

Unify your data, analytics, and AI on one common platform for all data use cases

Open

Unify your data ecosystem with open source, standards, and formats

Collaborative

Unify your data teams to collaborate across the entire data and AI workflow

Technology mapping: Hadoop to Databricks

- Spark → Highly tuned Spark engine (faster, less compute, one-stop shop)
- Hive, Impala → Highly tuned Spark engine (faster, less compute, one-stop shop)
- Storm/Spark → Spark Structured Streaming + Delta Lake (streaming + batch ingest)
- MapReduce → Orders of magnitude faster, but may need manual work
- HBase → With HBase on the cloud (alternatively, use cloud data stores that are well integrated with Databricks)

Resources

Blogs & Whitepapers

- It’s Time to Re-evaluate Your Relationship With Hadoop

- Hadoop to Databricks Technical Migration Guide

- Top Considerations When Migrating Off of Hadoop

- 5 Key Steps to Successfully Migrating from Hadoop to the Lakehouse Architecture

- Why Scribd Chose Delta Lake

- Forrester TEI Spotlight – Databricks Lakehouse Platform