Amazon SageMaker integration with Databricks

Combine the MLOps capabilities of Databricks with Amazon SageMaker to deploy and serve models

Features

Databricks incorporates MLflow for machine learning lifecycle management

MLflow Tracking

Record and query data science experiments

MLflow Projects

Package data science code in a reproducible format to run on any platform

MLflow Models

Deploy machine learning models in diverse serving environments

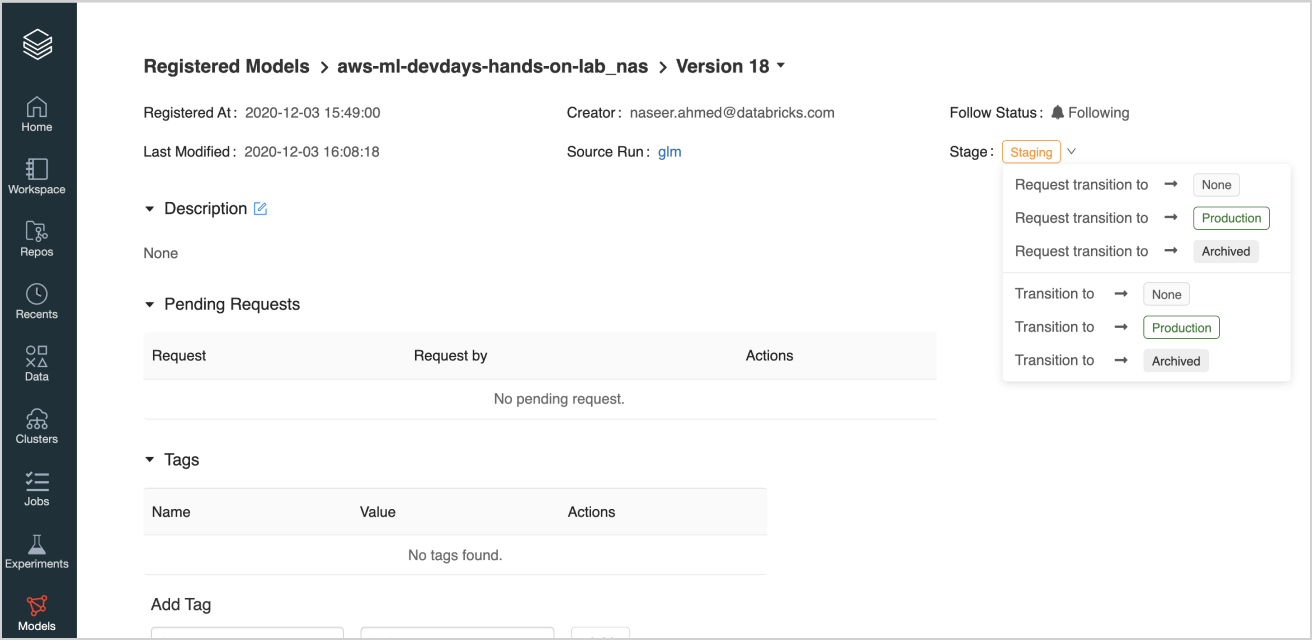

MLflow Model Registry

Store, annotate and discover models in a central repository

Benefits

Production-ready

With Amazon SageMaker, you can deploy your models directly into a production-ready hosted environment with a few clicks. SageMaker provides an HTTPS endpoint that you can integrate into your web applications. A typical example is a website that suggests products to a shopper: the model takes the shopper and their selections as input and predicts the next item, which the website then turns into an on-page offer.

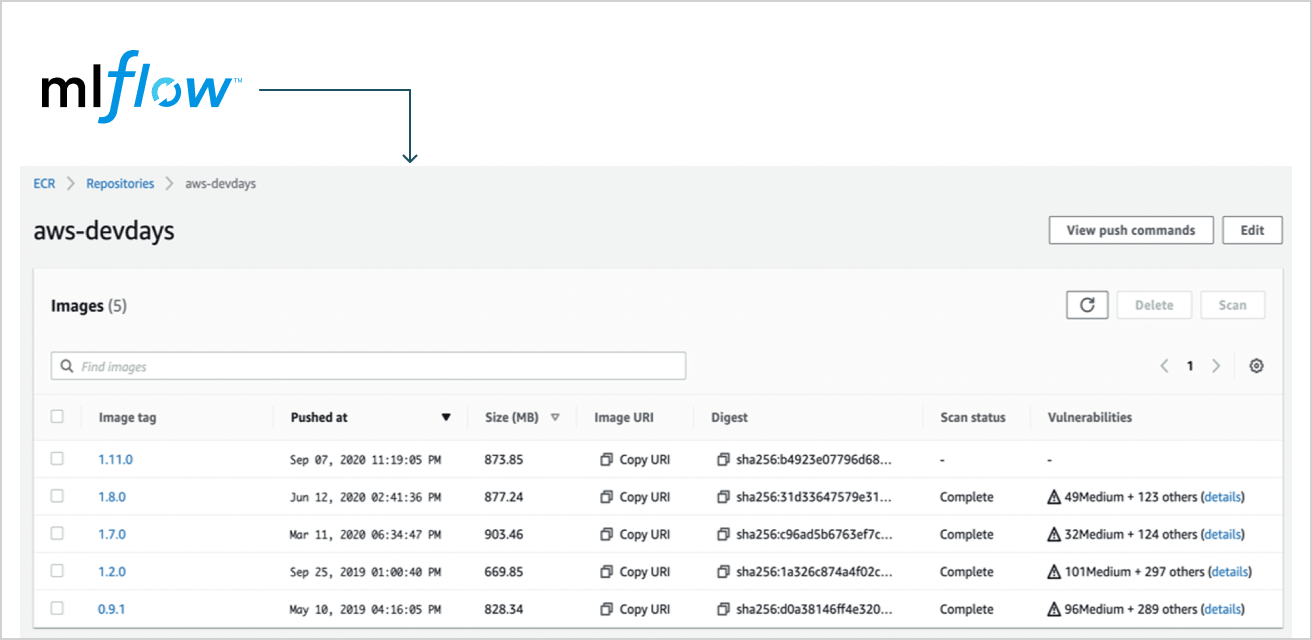

Build

To integrate MLflow and Amazon SageMaker, build a new MLflow SageMaker image, assign it a name, and push it to Amazon Elastic Container Registry (ECR). The build step creates an MLflow Docker image locally, so Docker must be installed and running. The image is then pushed to ECR under the currently active AWS account and in the currently active AWS region.
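The build-and-push step can be sketched in Python as a thin wrapper around the MLflow CLI. This is a minimal sketch, assuming the `mlflow sagemaker build-and-push-container` command and its `--container`, `--build/--no-build`, and `--push/--no-push` options are available in your MLflow version; the helper names `build_and_push_command` and `build_and_push` are hypothetical and exist only for illustration.

```python
import subprocess
from typing import List


def build_and_push_command(image_name: str = "mlflow-pyfunc",
                           build: bool = True,
                           push: bool = True) -> List[str]:
    """Return the argv list for `mlflow sagemaker build-and-push-container`.

    Assumption: the flags below match the MLflow CLI installed locally;
    check them with `mlflow sagemaker build-and-push-container --help`.
    """
    cmd = ["mlflow", "sagemaker", "build-and-push-container",
           "--container", image_name]
    # Each step can be switched off independently, for example to build
    # locally first and push later.
    cmd.append("--build" if build else "--no-build")
    cmd.append("--push" if push else "--no-push")
    return cmd


def build_and_push(image_name: str = "mlflow-pyfunc") -> None:
    """Run the command. Requires a running Docker daemon and AWS credentials
    for the account and region that should receive the image."""
    subprocess.run(build_and_push_command(image_name), check=True)
```

Calling `build_and_push("my-mlflow-image")` would build the image and push it to ECR; `build_and_push_command(push=False)` only returns the argument list for a local build, which is useful for inspecting the command before running it.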

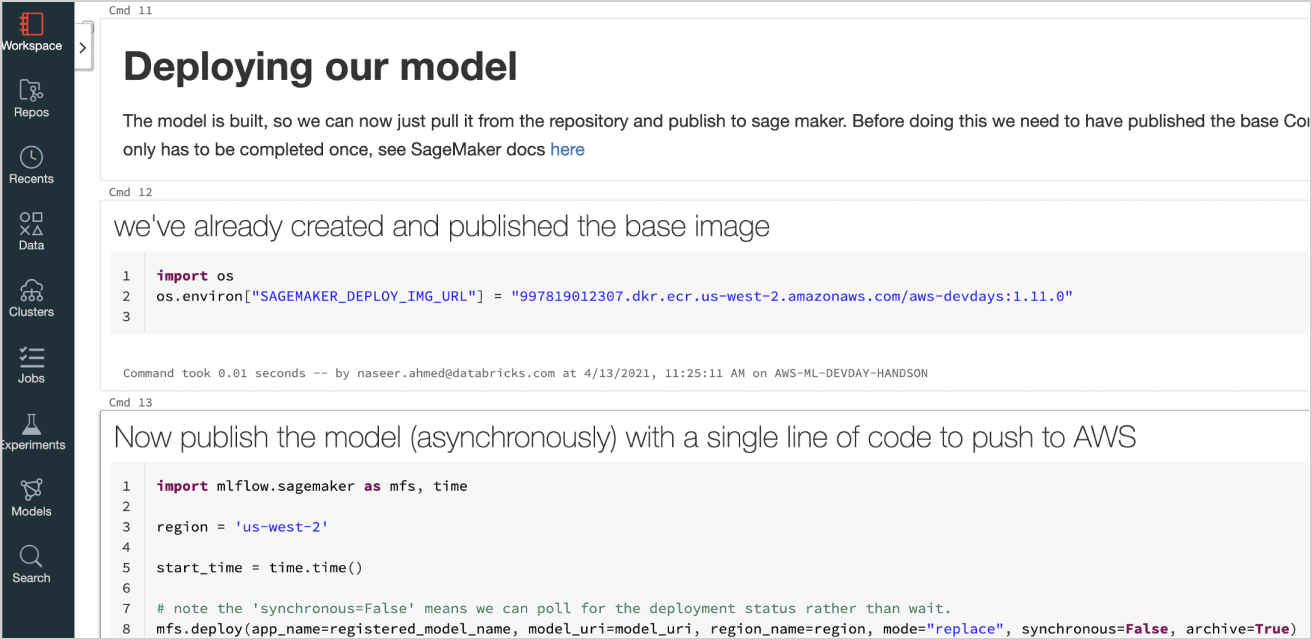

Deploy

Deploying a model on SageMaker as a REST API endpoint requires the currently active AWS account to have the correct permissions set up. By default, unless the async flag is specified, the deploy command blocks until the deployment either completes (definitively succeeds or fails) or the specified timeout elapses.
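A deployment with explicit blocking behavior might look like the sketch below. It assumes an MLflow 2.x installation where `mlflow.deployments.get_deploy_client("sagemaker")` is available, and it assumes the config keys shown (`execution_role_arn`, `image_url`, `region_name`, `instance_type`, `instance_count`, `synchronous`, `timeout_seconds`) match the SageMaker deployment client in your version. The helper names, the instance type, and the timeout value are illustrative choices, not values from this page.

```python
from typing import Any, Dict


def sagemaker_deploy_config(execution_role_arn: str,
                            image_url: str,
                            region_name: str,
                            synchronous: bool = True,
                            timeout_seconds: int = 1200,
                            instance_type: str = "ml.m4.xlarge",
                            instance_count: int = 1) -> Dict[str, Any]:
    """Collect deployment settings in one dict.

    synchronous=True blocks until the deployment succeeds, fails, or
    timeout_seconds elapses; synchronous=False returns immediately.
    Assumption: key names follow the MLflow SageMaker deployment client.
    """
    return {
        "execution_role_arn": execution_role_arn,
        "image_url": image_url,
        "region_name": region_name,
        "instance_type": instance_type,
        "instance_count": instance_count,
        "synchronous": synchronous,
        "timeout_seconds": timeout_seconds,
    }


def deploy(name: str, model_uri: str, config: Dict[str, Any]) -> Any:
    """Create the SageMaker endpoint. Needs mlflow, boto3, and AWS permissions."""
    # Imported lazily so the config helper works without mlflow installed.
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("sagemaker")
    return client.create_deployment(
        name=name,
        model_uri=model_uri,
        flavor="python_function",
        config=config,
    )
```

Keeping the settings in a separate function makes the blocking behavior visible at the call site: passing `synchronous=False` corresponds to the async flag described above, and the caller is then responsible for checking the endpoint status later.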

For more information about the input data formats accepted by the deployed REST API endpoint, see this documentation.
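As an illustration of calling the deployed endpoint, the sketch below builds a JSON request body and sends it with the boto3 `sagemaker-runtime` client. It assumes the endpoint runs an MLflow 2.x scoring server that accepts the `dataframe_split` JSON layout with content type `application/json`; older MLflow versions expect a different format, so check the input format documentation for your version. The helper names `dataframe_split_payload` and `invoke` are hypothetical.

```python
import json
from typing import Any, Sequence


def dataframe_split_payload(columns: Sequence[str],
                            rows: Sequence[Sequence[Any]]) -> str:
    """Serialize tabular input as a `dataframe_split` JSON document.

    Assumption: the endpoint's MLflow scoring server accepts this layout.
    """
    if any(len(row) != len(columns) for row in rows):
        raise ValueError("every row must have one value per column")
    return json.dumps({
        "dataframe_split": {
            "columns": list(columns),
            "data": [list(row) for row in rows],
        }
    })


def invoke(endpoint_name: str, payload: str, region_name: str) -> Any:
    """Send the payload to the SageMaker endpoint and decode the JSON reply."""
    # Imported lazily so the payload helper works without boto3 installed.
    import boto3

    runtime = boto3.client("sagemaker-runtime", region_name=region_name)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        Body=payload,
        ContentType="application/json",
    )
    # The response body is a stream of bytes containing the predictions.
    return json.loads(response["Body"].read())
```

For the shopping example, a web application could build the payload from the shopper's current selections, call `invoke` with the endpoint name chosen at deployment, and render the returned prediction as a product offer.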