Cataloging data for a lakehouse

Providing seamless access across the platform requires a strong catalog server

Using AWS Glue as a catalog for Databricks

To find and access data across all your services, you need a strong catalog. AWS Glue is a serverless, Apache Hive-compatible metastore that lets you share table metadata across AWS services, applications or AWS accounts. Databricks and Delta Lake integrate with AWS Glue so that you can discover data in your organization, register data in Delta Lake and discover data between Databricks instances.

Benefits

Databricks comes pre-integrated with AWS Glue

Simple

Simplifies manageability by using the same AWS Glue catalog across multiple Databricks workspaces.

Secure

Integrates security by using AWS Identity and Access Management (IAM) Credential Pass-Through for metadata in AWS Glue. For a detailed explanation, see the Databricks blog introducing Databricks AWS IAM Credential Pass-Through.

Collaborative

Provides easier access to metadata across Amazon services and to data cataloged in AWS Glue.

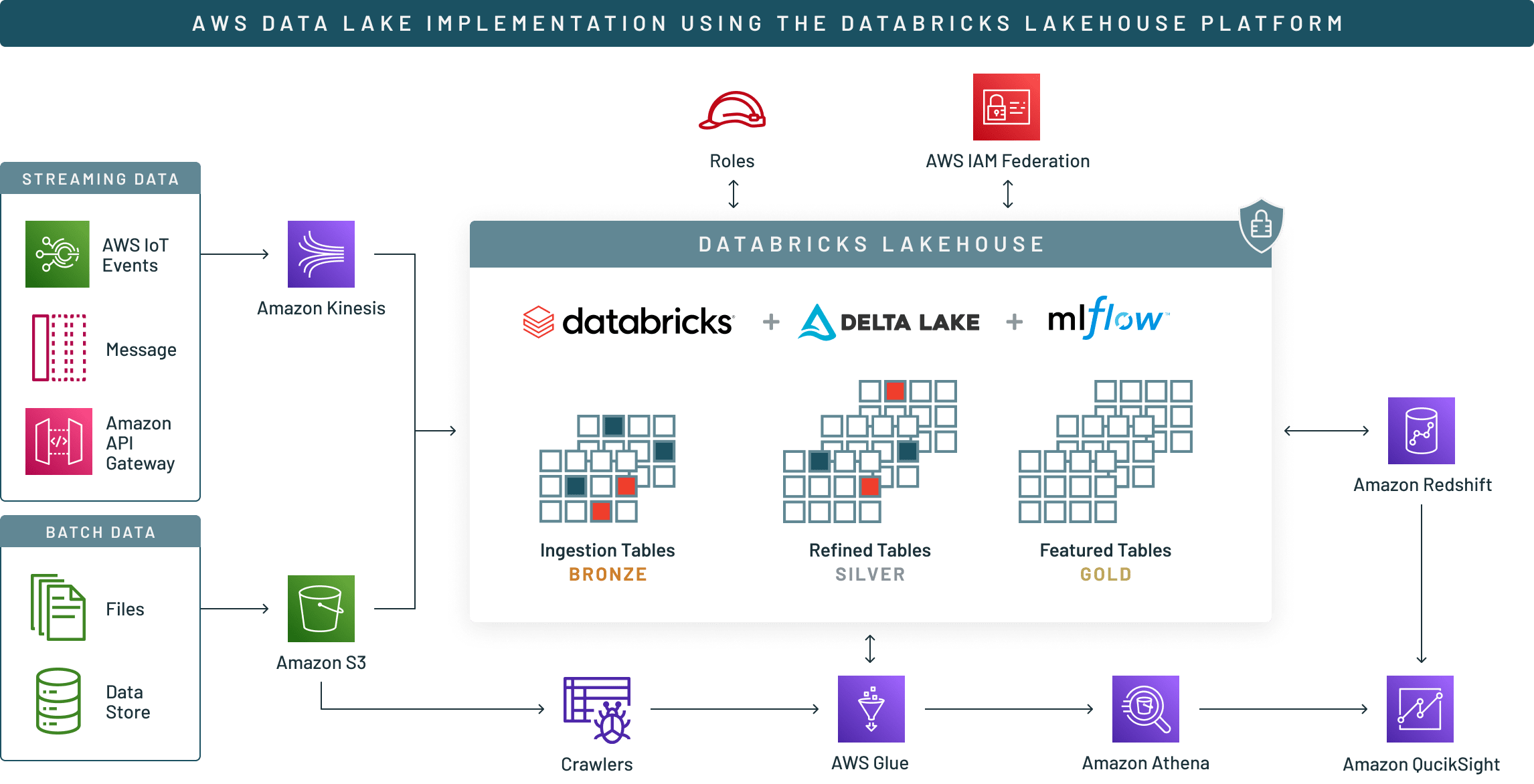

Databricks Delta Lake integration with AWS core services

This reference implementation illustrates how Databricks Delta Lake integrates with AWS core services to help you solve complex data lake challenges. Delta Lake runs on top of Amazon S3 and is integrated with Amazon Kinesis, AWS Glue, Amazon Athena, Amazon Redshift and Amazon QuickSight, to name a few.

If you are new to Delta Lake, you can learn more here.

Integrating Databricks with AWS Glue

STEP 1

How to configure a Databricks cluster to access the AWS Glue Catalog

Launch

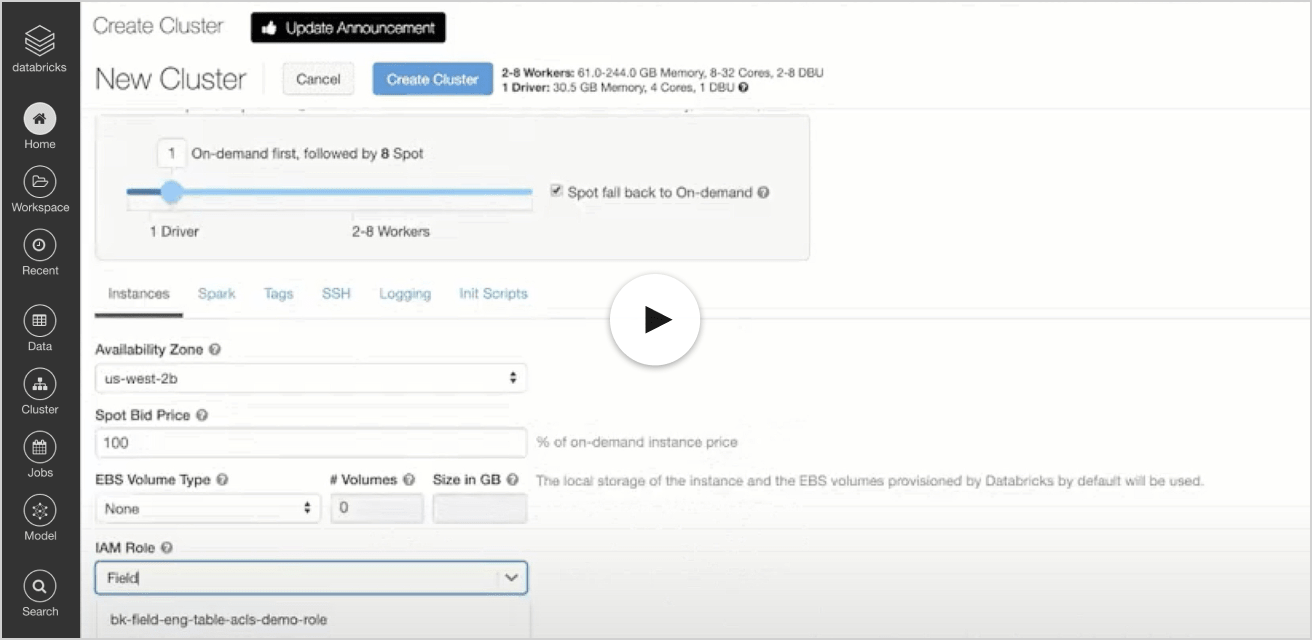

First, launch the Databricks compute cluster with the necessary AWS Glue Catalog IAM role. The IAM role and policy requirements are outlined step by step in the Databricks AWS Glue as Metastore documentation.

In this example, create an AWS IAM role called Field_Glue_Role, which also has delegated access to the S3 bucket. Attach the role to the cluster configuration, as depicted in the demo video.
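As a rough illustration of what the Glue part of such a role's policy might look like, the following Python sketch builds an IAM policy document. The action list is only an illustrative subset chosen for this example, and the wildcard resource is an assumption; the authoritative requirements are the ones in the Databricks AWS Glue as Metastore documentation.

```python
import json

# Illustrative subset of AWS Glue actions (an assumption for this sketch).
# Take the complete list from the Databricks AWS Glue as Metastore documentation.
GLUE_ACTIONS = [
    "glue:GetDatabase",
    "glue:GetDatabases",
    "glue:CreateDatabase",
    "glue:GetTable",
    "glue:GetTables",
    "glue:CreateTable",
    "glue:UpdateTable",
    "glue:GetPartitions",
    "glue:BatchCreatePartition",
]


def build_glue_policy(actions=GLUE_ACTIONS):
    """Return an IAM policy document (as a dict) that allows the given Glue actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "GrantCatalogAccessToGlue",
                "Effect": "Allow",
                "Action": list(actions),
                # Wildcard used for brevity; scope this down in a real account.
                "Resource": ["*"],
            }
        ],
    }


policy_json = json.dumps(build_glue_policy(), indent=2)
print(policy_json)
```

The printed JSON could be pasted into a policy attached to Field_Glue_Role; S3 access for the bucket would be granted by a separate statement.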

Update

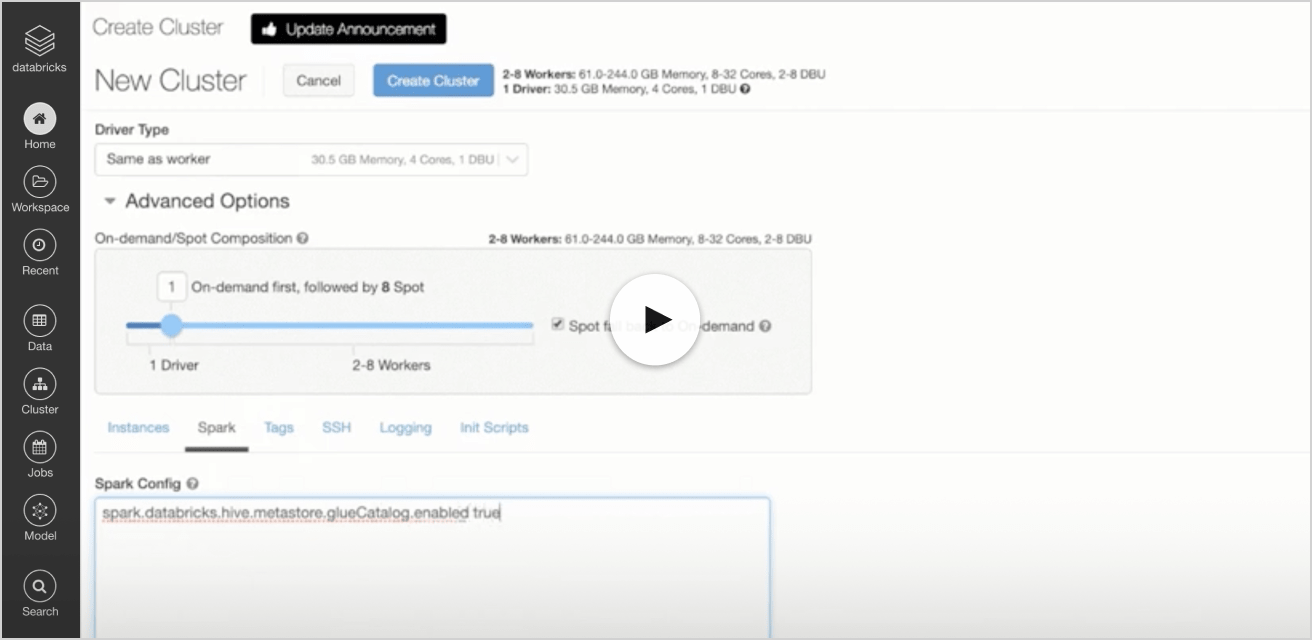

Next, set the Spark configuration properties in the cluster configuration before the cluster starts, as shown in the how-to-update video.
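For orientation, the property that enables the Glue catalog can be kept in a small Python dict and rendered into the "key value" lines that the cluster's Spark config box expects. This is a minimal sketch; the helper name `render_spark_conf` is hypothetical, and the property name should be checked against the Databricks documentation for your runtime version.

```python
# Property that switches the cluster's metastore to the AWS Glue Data Catalog.
# Verify the name against the Databricks documentation for your runtime.
GLUE_SPARK_CONF = {
    "spark.databricks.hive.metastore.glueCatalog.enabled": "true",
}


def render_spark_conf(conf):
    """Render a dict as one 'key value' line per property, sorted by key."""
    return "\n".join(f"{key} {value}" for key, value in sorted(conf.items()))


print(render_spark_conf(GLUE_SPARK_CONF))
```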

STEP 2

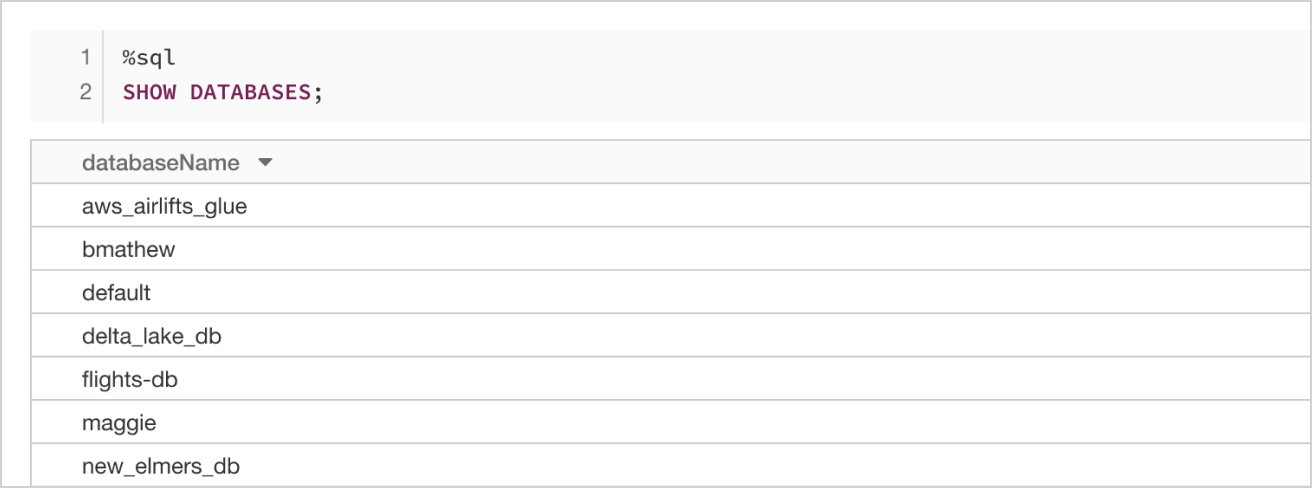

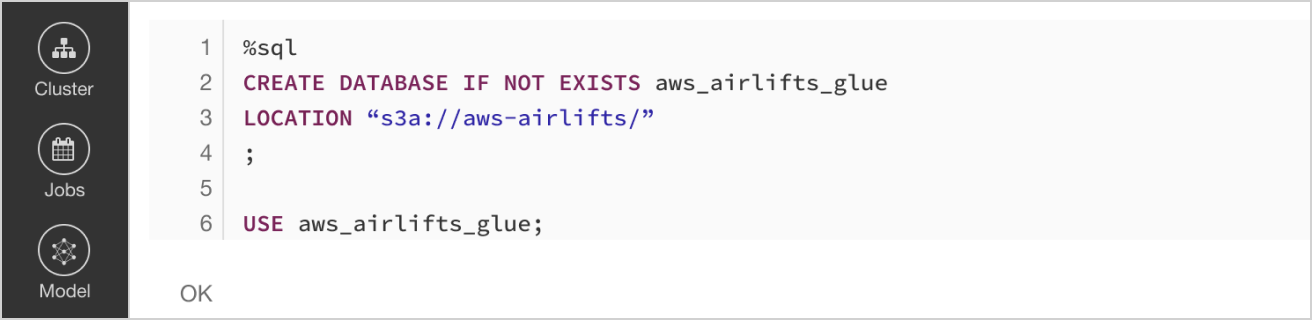

Setting up the AWS Glue database using a Databricks notebook
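A notebook cell for this step might look like the sketch below. It assumes the notebook's built-in `spark` session and a cluster already configured to use AWS Glue as its metastore; the function names and the database name `movies_db` are hypothetical.

```python
def create_database_sql(name):
    """Build a CREATE DATABASE statement; the name is checked because it is interpolated."""
    if not name.replace("_", "").isalnum():
        raise ValueError(f"unexpected database name: {name!r}")
    return f"CREATE DATABASE IF NOT EXISTS {name}"


def setup_glue_database(spark, name):
    """Create the database, then list databases so you can confirm it is visible."""
    spark.sql(create_database_sql(name))
    return [row[0] for row in spark.sql("SHOW DATABASES").collect()]


# In a Databricks notebook (hypothetical database name):
# setup_glue_database(spark, "movies_db")
```

With the Glue catalog enabled, the new database should also appear in the AWS Glue console.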

STEP 3

Create a Delta Lake table and manifest file using the same metastore

Create and catalog

Create and catalog the table directly from the notebook into the AWS Glue Data Catalog. To create and catalog tables using crawlers instead, refer to Populating the AWS Glue Data Catalog.

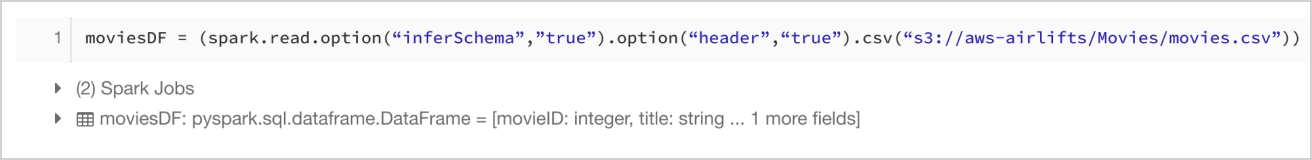

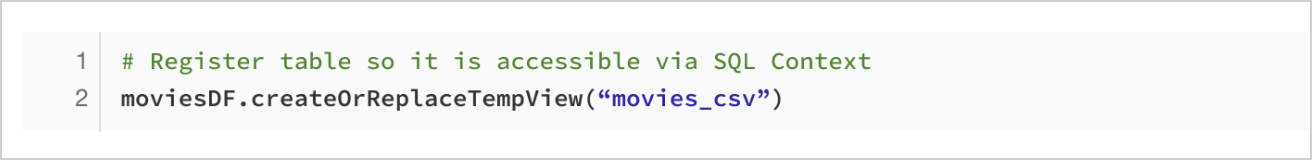

The demo data set here is from a movie recommendation site called MovieLens and consists of movie ratings. Create a DataFrame with this Python code.

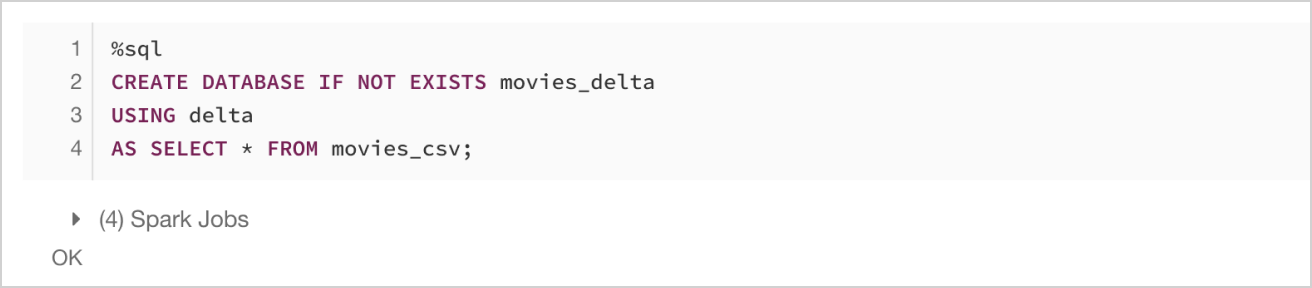
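The original code is only available as a screenshot, so the following is a minimal PySpark sketch of the same idea rather than the author's exact cell. It assumes a CSV file with a header row; the function name, the view name `movie_ratings_tmp` and the example path are hypothetical.

```python
def load_ratings(spark, path, view_name="movie_ratings_tmp"):
    """Read a ratings CSV into a DataFrame and expose it as a temporary view."""
    df = (
        spark.read
        .option("header", "true")       # first line holds the column names
        .option("inferSchema", "true")  # let Spark guess numeric column types
        .csv(path)
    )
    # The temporary view makes the data queryable from SQL cells.
    df.createOrReplaceTempView(view_name)
    return df


# In a Databricks notebook (hypothetical path):
# ratings_df = load_ratings(spark, "s3a://<your-bucket>/movielens/ratings.csv")
```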

Delta Lake

Now create a Delta Lake table using the temporary table created in the previous step and this SQL command.

Note: Creating a Delta Lake table takes only a few steps, as described in the Delta Lake Quickstart Guide.
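As a sketch of what such a command can look like, the Python helper below assembles a CREATE TABLE ... USING DELTA statement that copies the temporary view into a Delta Lake table at an S3 location. The table and view names are hypothetical; the location is the table directory implied by the manifest path used in the sample code.

```python
def create_delta_table_sql(table, location, source_view):
    """Build a CTAS statement that writes the view's rows as a Delta Lake table."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table} "
        f"USING DELTA LOCATION '{location}' "
        f"AS SELECT * FROM {source_view}"
    )


statement = create_delta_table_sql(
    table="movies_db.movies_delta",          # hypothetical database.table name
    location="s3a://aws-airlifts/movies_delta/",
    source_view="movie_ratings_tmp",         # hypothetical temporary view name
)
print(statement)

# In a Databricks notebook: spark.sql(statement)
```

Because the cluster uses AWS Glue as its metastore, running the statement registers the table in the Glue catalog as well.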

Generating a manifest for Amazon Athena

Now generate the manifest file required by Amazon Athena using the following steps.

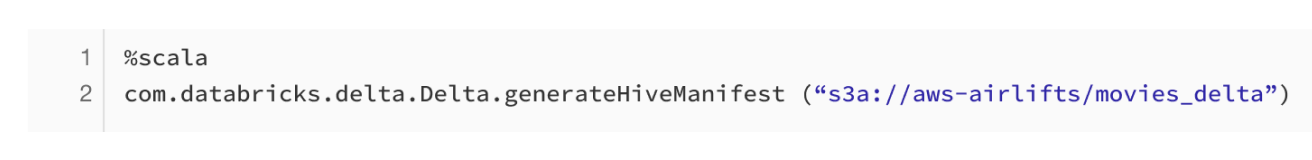

1. Generate manifests by running this Scala method. Remember to prefix the cell with %scala if you have created a Python, SQL or R notebook.

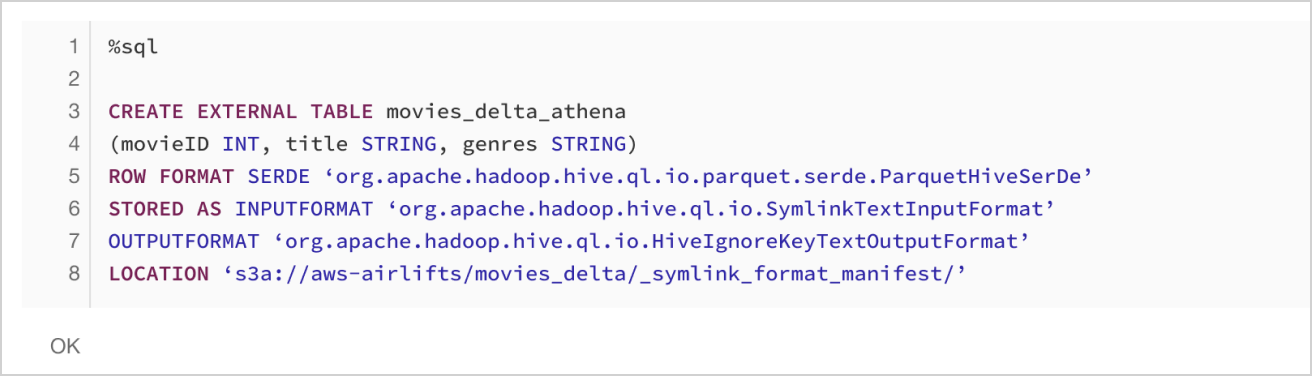

2. Create a table in the Hive metastore connected to Athena using the special format SymlinkTextInputFormat and the manifest file location.

In the sample code, the manifest file is created in the s3a://aws-airlifts/movies_delta/_symlink_format_manifest/ file location.
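The two steps above can also be sketched in Python rather than Scala. The first helper calls the Delta Lake Python API to write the manifest; the second builds a DDL statement for a SymlinkTextInputFormat table over the manifest directory. The function names, the table name and the column list are assumptions for illustration, and the column types must match the actual Delta table schema.

```python
def generate_manifest(spark, table_path):
    """Write manifest files under <table_path>/_symlink_format_manifest/."""
    from delta.tables import DeltaTable  # provided on Databricks and by delta-spark
    DeltaTable.forPath(spark, table_path).generate("symlink_format_manifest")


def athena_table_ddl(table, columns, table_path):
    """Build the external-table DDL that points at the manifest directory."""
    cols = ", ".join(f"{name} {dtype}" for name, dtype in columns)
    manifest = table_path.rstrip("/") + "/_symlink_format_manifest/"
    return (
        f"CREATE EXTERNAL TABLE {table} ({cols}) "
        "ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe' "
        "STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.SymlinkTextInputFormat' "
        "OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' "
        f"LOCATION '{manifest}'"
    )


# Hypothetical table name and schema for the ratings data.
ddl = athena_table_ddl(
    table="movies_db.movies_delta_athena",
    columns=[("userId", "INT"), ("movieId", "INT"),
             ("rating", "DOUBLE"), ("timestamp", "BIGINT")],
    table_path="s3a://aws-airlifts/movies_delta/",
)
print(ddl)

# In a Databricks notebook:
# generate_manifest(spark, "s3a://aws-airlifts/movies_delta/")
# spark.sql(ddl)
```

The manifest is a snapshot of the table's data files, so it needs to be regenerated after the Delta table changes for Athena to see the new data.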

STEP 4

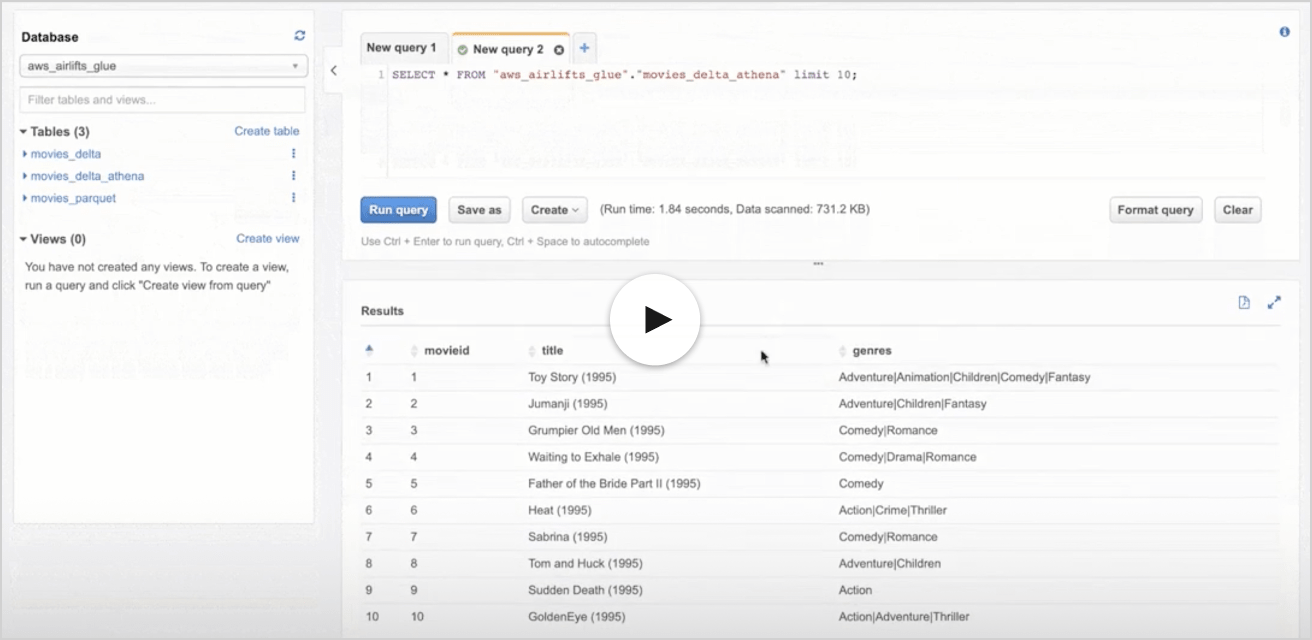

Query the Delta Lake table using Amazon Athena
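Besides the Athena console, the table can be queried programmatically. The sketch below uses the boto3 Athena client and assumes AWS credentials are configured; the function names, database name, table name and results bucket are hypothetical.

```python
def athena_query_request(sql, database, output_location):
    """Build the keyword arguments for Athena's StartQueryExecution call."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_athena_query(sql, database, output_location):
    """Submit the query with boto3 and return its execution ID."""
    import boto3  # imported lazily so the request builder works without AWS access
    client = boto3.client("athena")
    response = client.start_query_execution(
        **athena_query_request(sql, database, output_location)
    )
    return response["QueryExecutionId"]


request = athena_query_request(
    sql="SELECT * FROM movies_delta_athena LIMIT 10",      # hypothetical table
    database="movies_db",                                   # hypothetical database
    output_location="s3://<your-results-bucket>/athena/",   # placeholder bucket
)
print(request)
```

Athena runs queries asynchronously, so the returned execution ID is what you would use to poll for status and fetch results.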

Conclusion

Integrating AWS Glue with Databricks provides a serverless metastore strategy for enterprises using the AWS ecosystem. Delta Lake improves the reliability of data lakes, and integrating with Amazon Athena provides serverless access to the same data. The Databricks Lakehouse Platform powers a data lake strategy on AWS that gives data analysts, data engineers and data scientists performant and reliable data access.